diff --git a/.dockerignore b/.dockerignore

index 986bebd047..b25d2d9ae8 100644

--- a/.dockerignore

+++ b/.dockerignore

@@ -1,6 +1,4 @@

node_modules

-bower_components

hass_frontend

-build

-build-temp

+hass_frontend_es5

.git

diff --git a/README.md b/README.md

index 9226a5ac60..d59eb6deda 100644

--- a/README.md

+++ b/README.md

@@ -1,16 +1,11 @@

# Home Assistant Polymer [](https://travis-ci.org/home-assistant/home-assistant-polymer)

-This is the repository for the official [Home Assistant](https://home-assistant.io) frontend. The frontend is built on top of the following technologies:

-

- * [Websockets](https://developer.mozilla.org/en-US/docs/Web/API/WebSockets_API)

- * [Polymer](https://www.polymer-project.org/)

- * [Rollup](http://rollupjs.org/) to package Home Assistant JS

- * [Bower](https://bower.io) for Polymer package management

+This is the repository for the official [Home Assistant](https://home-assistant.io) frontend.

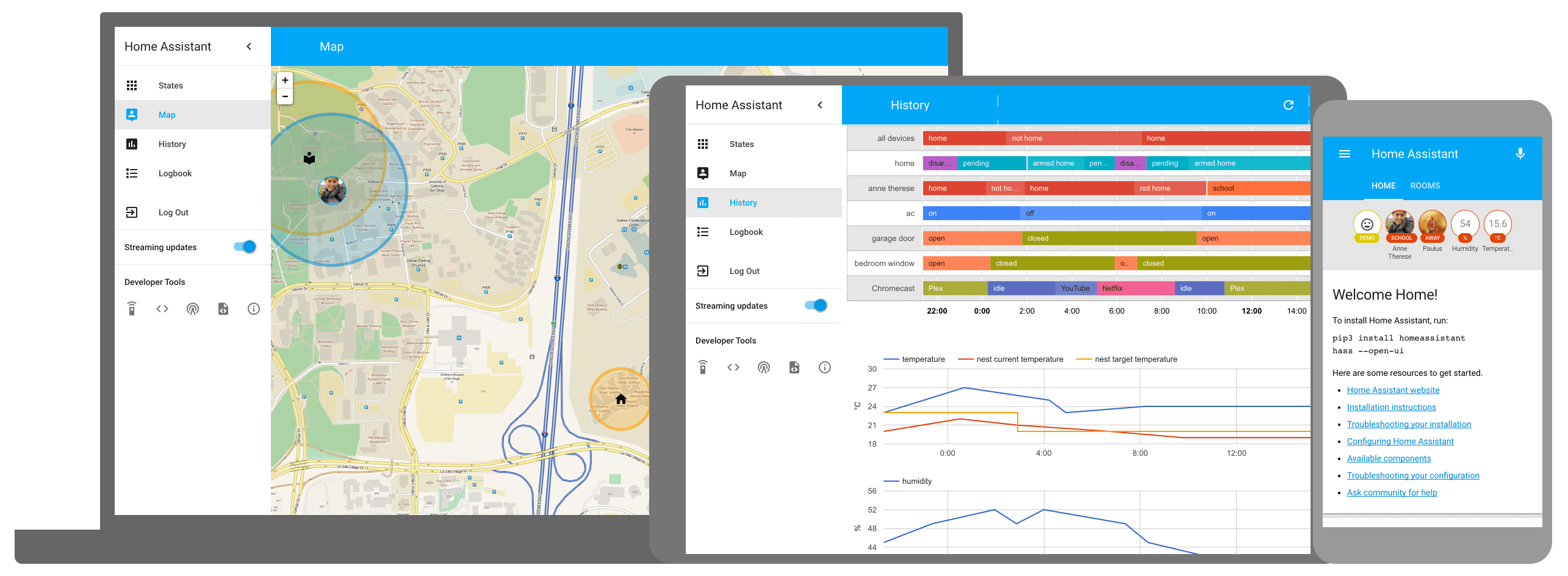

[](https://home-assistant.io/demo/)

-[View demo of the Polymer frontend](https://home-assistant.io/demo/)

-[More information about Home Assistant](https://home-assistant.io)

+[View demo of the Polymer frontend](https://home-assistant.io/demo/)

+[More information about Home Assistant](https://home-assistant.io)

[Frontend development instructions](https://home-assistant.io/developers/frontend/)

## License

diff --git a/gulp/common/html.js b/gulp/common/html.js

deleted file mode 100644

index 3ff65a0198..0000000000

--- a/gulp/common/html.js

+++ /dev/null

@@ -1,57 +0,0 @@

-const {

- Analyzer,

- FSUrlLoader

-} = require('polymer-analyzer');

-

-const Bundler = require('polymer-bundler').Bundler;

-const parse5 = require('parse5');

-

-const { streamFromString } = require('./stream');

-

-// Bundle an HTML file and convert it to a stream

-async function bundledStreamFromHTML(path, bundlerOptions = {}) {

- const bundler = new Bundler(bundlerOptions);

- const manifest = await bundler.generateManifest([path]);

- const result = await bundler.bundle(manifest);

- return streamFromString(path, parse5.serialize(result.documents.get(path).ast));

-}

-

-async function analyze(root, paths) {

- const analyzer = new Analyzer({

- urlLoader: new FSUrlLoader(root),

- });

- return analyzer.analyze(paths);

-}

-

-async function findDependencies(root, element) {

- const deps = new Set();

-

- async function resolve(files) {

- const analysis = await analyze(root, files);

- const toResolve = [];

-

- for (const file of files) {

- const doc = analysis.getDocument(file);

-

- for (const importEl of doc.getFeatures({ kind: 'import' })) {

- const url = importEl.url;

- if (!deps.has(url)) {

- deps.add(url);

- toResolve.push(url);

- }

- }

- }

-

- if (toResolve.length > 0) {

- return resolve(toResolve);

- }

- }

-

- await resolve([element]);

- return deps;

-}

-

-module.exports = {

- bundledStreamFromHTML,

- findDependencies,

-};

diff --git a/gulp/common/strategy.js b/gulp/common/strategy.js

deleted file mode 100644

index 705eaac79f..0000000000

--- a/gulp/common/strategy.js

+++ /dev/null

@@ -1,33 +0,0 @@

-/**

- * Polymer build strategy to strip imports, even if explictely imported

- */

-module.exports.stripImportsStrategy = function (urls) {

- return (bundles) => {

- for (const bundle of bundles) {

- for (const url of urls) {

- bundle.stripImports.add(url);

- }

- }

- return bundles;

- };

-};

-

-/**

- * Polymer build strategy to strip everything but the entrypoints

- * for bundles that match a specific entry point.

- */

-module.exports.stripAllButEntrypointStrategy = function (entryPoint) {

- return (bundles) => {

- for (const bundle of bundles) {

- if (bundle.entrypoints.size === 1 &&

- bundle.entrypoints.has(entryPoint)) {

- for (const file of bundle.files) {

- if (!bundle.entrypoints.has(file)) {

- bundle.stripImports.add(file);

- }

- }

- }

- }

- return bundles;

- };

-};

diff --git a/gulp/common/stream.js b/gulp/common/stream.js

deleted file mode 100644

index b0bf9d5f73..0000000000

--- a/gulp/common/stream.js

+++ /dev/null

@@ -1,21 +0,0 @@

-const stream = require('stream');

-

-const gutil = require('gulp-util');

-

-function streamFromString(filename, string) {

- var src = stream.Readable({ objectMode: true });

- src._read = function () {

- this.push(new gutil.File({

- cwd: '',

- base: '',

- path: filename,

- contents: Buffer.from(string)

- }));

- this.push(null);

- };

- return src;

-}

-

-module.exports = {

- streamFromString,

-};

diff --git a/gulp/common/transform.js b/gulp/common/transform.js

index a5871ea848..fae337ff15 100644

--- a/gulp/common/transform.js

+++ b/gulp/common/transform.js

@@ -1,11 +1,12 @@

const gulpif = require('gulp-if');

const babel = require('gulp-babel');

-const uglify = require('./gulp-uglify.js');

const { gulp: cssSlam } = require('css-slam');

const htmlMinifier = require('gulp-html-minifier');

const { HtmlSplitter } = require('polymer-build');

const pump = require('pump');

+const uglify = require('./gulp-uglify.js');

+

module.exports.minifyStream = function (stream, es6) {

const sourcesHtmlSplitter = new HtmlSplitter();

return pump([

diff --git a/gulp/tasks/auth.js b/gulp/tasks/auth.js

deleted file mode 100644

index 6d39fe30c8..0000000000

--- a/gulp/tasks/auth.js

+++ /dev/null

@@ -1,28 +0,0 @@

-const gulp = require('gulp');

-const path = require('path');

-const replace = require('gulp-batch-replace');

-const rename = require('gulp-rename');

-

-const config = require('../config');

-const minifyStream = require('../common/transform').minifyStream;

-const {

- bundledStreamFromHTML,

-} = require('../common/html');

-

-const es5Extra = "";

-

-async function buildAuth(es6) {

- const frontendPath = es6 ? 'frontend_latest' : 'frontend_es5';

- const stream = gulp.src(path.resolve(config.polymer_dir, 'src/authorize.html'))

- .pipe(replace([

- ['', es6 ? '' : es5Extra],

- ['/home-assistant-polymer/hass_frontend/authorize.js', `/${frontendPath}/authorize.js`],

- ]));

-

- return minifyStream(stream, /* es6= */ es6)

- .pipe(rename('authorize.html'))

- .pipe(gulp.dest(es6 ? config.output : config.output_es5));

-}

-

-gulp.task('authorize-es5', () => buildAuth(/* es6= */ false));

-gulp.task('authorize', () => buildAuth(/* es6= */ true));

diff --git a/gulp/tasks/gen-authorize-html.js b/gulp/tasks/gen-authorize-html.js

new file mode 100644

index 0000000000..3a25dde429

--- /dev/null

+++ b/gulp/tasks/gen-authorize-html.js

@@ -0,0 +1,43 @@

+const gulp = require('gulp');

+const path = require('path');

+const replace = require('gulp-batch-replace');

+const rename = require('gulp-rename');

+const md5 = require('../common/md5');

+const url = require('url');

+

+const config = require('../config');

+const minifyStream = require('../common/transform').minifyStream;

+

+const buildReplaces = {

+ '/frontend_latest/authorize.js': 'authorize.js',

+};

+

+const es5Extra = "";

+

+async function buildAuth(es6) {

+ const targetPath = es6 ? config.output : config.output_es5;

+ const targetUrl = es6 ? '/frontend_latest/' : '/frontend_es5/';

+ const frontendPath = es6 ? 'frontend_latest' : 'frontend_es5';

+ const toReplace = [

+ ['', es6 ? '' : es5Extra],

+ ['/home-assistant-polymer/hass_frontend/authorize.js', `/${frontendPath}/authorize.js`],

+ ];

+

+ for (const [replaceSearch, filename] of Object.entries(buildReplaces)) {

+ const parsed = path.parse(filename);

+ const hash = md5(path.resolve(targetPath, filename));

+ toReplace.push([

+ replaceSearch,

+ url.resolve(targetUrl, `${parsed.name}-${hash}${parsed.ext}`)]);

+ }

+

+ const stream = gulp.src(path.resolve(config.polymer_dir, 'src/authorize.html'))

+ .pipe(replace(toReplace));

+

+ return minifyStream(stream, /* es6= */ es6)

+ .pipe(rename('authorize.html'))

+ .pipe(gulp.dest(targetPath));

+}

+

+gulp.task('gen-authorize-html-es5', () => buildAuth(/* es6= */ false));

+gulp.task('gen-authorize-html', () => buildAuth(/* es6= */ true));

diff --git a/gulp/tasks/gen-index-html.js b/gulp/tasks/gen-index-html.js

index 1b65979d6b..4365d5c8fe 100644

--- a/gulp/tasks/gen-index-html.js

+++ b/gulp/tasks/gen-index-html.js

@@ -7,8 +7,8 @@ const md5 = require('../common/md5');

const { minifyStream } = require('../common/transform');

const buildReplaces = {

- '/home-assistant-polymer/hass_frontend/core.js': 'core.js',

- '/home-assistant-polymer/hass_frontend/app.js': 'app.js',

+ '/frontend_latest/core.js': 'core.js',

+ '/frontend_latest/app.js': 'app.js',

};

function generateIndex(es6) {

@@ -18,7 +18,7 @@ function generateIndex(es6) {

const toReplace = [

// Needs to look like a color during CSS minifiaction

['{{ theme_color }}', '#THEME'],

- ['/home-assistant-polymer/hass_frontend/mdi.html',

+ ['/static/mdi.html',

`/static/mdi-${md5(path.resolve(config.output, 'mdi.html'))}.html`],

];

diff --git a/hassio/webpack.config.js b/hassio/webpack.config.js

index 4bc2abf15b..0566c1eae2 100644

--- a/hassio/webpack.config.js

+++ b/hassio/webpack.config.js

@@ -1,6 +1,7 @@

const fs = require('fs');

const path = require('path');

const webpack = require('webpack');

+const UglifyJsPlugin = require('uglifyjs-webpack-plugin');

const config = require('./config.js');

const version = fs.readFileSync('../setup.py', 'utf8').match(/\d{8}[^']*/);

@@ -12,8 +13,27 @@ const isProdBuild = process.env.NODE_ENV === 'production'

const chunkFilename = isProdBuild ?

'[name]-[chunkhash].chunk.js' : '[name].chunk.js';

+const plugins = [

+ new webpack.DefinePlugin({

+ __DEV__: JSON.stringify(!isProdBuild),

+ __VERSION__: JSON.stringify(VERSION),

+ })

+];

+

+if (isProdBuild) {

+ plugins.push(new UglifyJsPlugin({

+ extractComments: true,

+ sourceMap: true,

+ uglifyOptions: {

+ // Disabling because it broke output

+ mangle: false,

+ }

+ }));

+}

+

module.exports = {

mode: isProdBuild ? 'production' : 'development',

+ devtool: isProdBuild ? 'source-map' : 'inline-source-map',

entry: {

app: './src/hassio-app.js',

},

@@ -36,16 +56,11 @@ module.exports = {

}

]

},

- plugins: [

- new webpack.DefinePlugin({

- __DEV__: JSON.stringify(!isProdBuild),

- __VERSION__: JSON.stringify(VERSION),

- })

- ],

+ plugins,

output: {

filename: '[name].js',

chunkFilename: chunkFilename,

path: config.buildDir,

- publicPath: config.publicPath,

+ publicPath: `${config.publicPath}/`,

}

};

diff --git a/index.html b/index.html

index a9604b7589..8fddf57ebb 100644

--- a/index.html

+++ b/index.html

@@ -9,7 +9,7 @@

-

+

@@ -82,28 +82,24 @@

-

-

-

+

+

+

{% for extra_url in extra_urls -%}

{% endfor -%}

diff --git a/package.json b/package.json

index 29fcc3518e..8a1fa1ad09 100644

--- a/package.json

+++ b/package.json

@@ -137,6 +137,7 @@

"sw-precache": "^5.2.0",

"uglify-es": "^3.1.9",

"uglify-js": "^3.1.9",

+ "uglifyjs-webpack-plugin": "^1.2.5",

"wct-browser-legacy": "^1.0.0",

"web-component-tester": "^6.6.0",

"webpack": "^4.8.1",

diff --git a/polymer.json b/polymer.json

index 84d9616c77..74058afa2e 100644

--- a/polymer.json

+++ b/polymer.json

@@ -1,6 +1,6 @@

{

"entrypoint": "index.html",

- "shell": "src/home-assistant.js",

+ "shell": "src/entrypoints/app.js",

"fragments": [

"src/panels/config/ha-panel-config.js",

"src/panels/dev-event/ha-panel-dev-event.js",

diff --git a/public/markdown-js.html b/public/markdown-js.html

deleted file mode 100644

index ca0a2a2555..0000000000

--- a/public/markdown-js.html

+++ /dev/null

@@ -1,12 +0,0 @@

-

diff --git a/script/build_frontend b/script/build_frontend

index 9d775870ba..bcff9d176f 100755

--- a/script/build_frontend

+++ b/script/build_frontend

@@ -15,24 +15,22 @@ mkdir $OUTPUT_DIR_ES5

cp -r public/__init__.py $OUTPUT_DIR_ES5/

# Build frontend

-BUILD_DEV=0 ./node_modules/.bin/gulp build-translations authorize authorize-es5

-NODE_ENV=production ./node_modules/.bin/webpack -p

+BUILD_DEV=0 ./node_modules/.bin/gulp build-translations

+NODE_ENV=production ./node_modules/.bin/webpack

# Icons

script/update_mdi.py

./node_modules/.bin/gulp compress

-# Stub the service worker

-touch $OUTPUT_DIR/service_worker.js

-touch $OUTPUT_DIR_ES5/service_worker.js

-

# Generate the __init__ file

echo "VERSION = '`git rev-parse HEAD`'" >> $OUTPUT_DIR/__init__.py

echo "CREATED_AT = `date +%s`" >> $OUTPUT_DIR/__init__.py

echo "VERSION = '`git rev-parse HEAD`'" >> $OUTPUT_DIR_ES5/__init__.py

echo "CREATED_AT = `date +%s`" >> $OUTPUT_DIR_ES5/__init__.py

-# Generate the MD5 hash of the new frontend

-./node_modules/.bin/gulp gen-index-html

-./node_modules/.bin/gulp gen-index-html-es5

+# Generate index.htmls with the MD5 hash of the builds

+./node_modules/.bin/gulp \

+ gen-index-html gen-index-html-es5 \

+ gen-authorize-html gen-authorize-html-es5

+

diff --git a/script/develop b/script/develop

index 5b22330206..488f7b6b89 100755

--- a/script/develop

+++ b/script/develop

@@ -5,16 +5,22 @@

set -e

OUTPUT_DIR=hass_frontend

+OUTPUT_DIR_ES5=hass_frontend_es5

-rm -rf $OUTPUT_DIR

+rm -rf $OUTPUT_DIR $OUTPUT_DIR_ES5

cp -r public $OUTPUT_DIR

+mkdir $OUTPUT_DIR_ES5

+# Needed in case the frontend repo is installed with pip3 install -e

+cp -r public/__init__.py $OUTPUT_DIR_ES5/

-./node_modules/.bin/gulp build-translations authorize authorize-es5

+./node_modules/.bin/gulp build-translations

+cp src/authorize.html $OUTPUT_DIR

+

+# Manually copy over this file as we don't run the ES5 build

+# The Hass.io panel depends on it.

+cp node_modules/@webcomponents/webcomponentsjs/custom-elements-es5-adapter.js $OUTPUT_DIR_ES5

# Icons

script/update_mdi.py

-# Stub the service worker

-touch $OUTPUT_DIR/service_worker.js

-

./node_modules/.bin/webpack --watch --progress

diff --git a/src/authorize.html b/src/authorize.html

index 98d0b9368e..65f40c2edd 100644

--- a/src/authorize.html

+++ b/src/authorize.html

@@ -8,19 +8,15 @@

Loading

-

+