diff --git a/source/_posts/2023-08-02-release-20238.markdown b/source/_posts/2023-08-02-release-20238.markdown

index 2f39d7b4fb8..3c3f17be2f4 100644

--- a/source/_posts/2023-08-02-release-20238.markdown

+++ b/source/_posts/2023-08-02-release-20238.markdown

@@ -207,83 +207,47 @@ could ask Home Assistant to generate an image using your voice...

## Wildcard support for sentence triggers

-{% details "TODO" %}

+[Sentence triggers](/docs/automation/trigger/#sentence-wildcards) now support

+wildcards! This means you can now partially match a sentence, and use the

+matched part in your actions. This is what powers the above

+[shopping list feature](#add-items-to-your-shopping-list-using-assist)

+too, and it can be used for many other things as well.

-- Improve/extend story

-- Proof read/spelling/grammar

-- Replace screenshot

+You could, for example, create a sentence trigger that matches when you say:

-Sources:

+> Play the white album by the Beatles

-- https://github.com/home-assistant/core/pull/97236

-- https://github.com/home-assistant/home-assistant.io/pull/28332

+Using the wildcard support, you can get the album and artist name from the spoken

+sentence and use those in your actions. To trigger on the above example sentence,

+you would use the command `Play {album} by {artist}` in your sentence trigger.

-{% enddetails %}

-⚠️ **This is pending a final review and might not make it into the release.**

+This will make the `album` and `artist` available as trigger variables that you

+can use in your actions; for example, to start playing the music requested.
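+
+As a minimal sketch (not taken from the documentation), the trigger and an
+action using both variables could look roughly like this in YAML. The
+`persistent_notification.create` action is only a stand-in for illustration,
+and the `trigger.slots` syntax is assumed to work the same way as in JLo's
+blueprint mentioned later in this section:
+
+{% raw %}
+
+```yaml
+trigger:
+  - platform: conversation
+    command: "Play {album} by {artist}"
+action:
+  # Stand-in action: show what was matched instead of starting playback.
+  - service: persistent_notification.create
+    data:
+      message: "Album: {{ trigger.slots.album }}, artist: {{ trigger.slots.artist }}"
+```
+
+{% endraw %}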

-Sentence triggers now support wildcards! This means you can now partially match

-a sentence, and use the matched part in your actions. This is what powers the

-above shopping list feature too and it can be used for many other things as well.

+These wildcards are interesting and open up a lot of possibilities!

+[Read more about sentence triggers in our documentation](/docs/automation/trigger/#sentence-trigger).

-You could for example, create a sentence trigger that matches when you say:

+[JLo] realized he could use these wildcards, combined with the new

+[generate image service](#generate-an-image-with-openais-dall-e), to let

+Home Assistant generate an image and show it on his Chromecast-enabled device

+just by using his voice! 😎 You could ask it:

-> Play the white album by the beatles

+> Show me a picture of an astronaut riding a unicorn!

-Using the wildcard support, you can actually get the album and artist name

-from the sentence, and use that in your actions.

+He put this together in an automation blueprint, which you can use to do the

+same thing in your own Home Assistant instance:

-Sentence trigger; `Play {album} by {artist}`

+

-This will make `album` and `artist` available as trigger variables that you

-can use in your actions; e.g., to start playing the music requested. Or maybe,

-you could even ask to display an AI generated image on your TV using a prompt...

+Provide the sentence you'd like to trigger on and the media player you want to

+show the image on, and you're good to go! You can import his blueprint using

+the My Home Assistant button below:

-## Generate an image with OpenAI's DALL-E

+{% my blueprint_import badge blueprint_url="https://www.home-assistant.io/blueprints/blog/2023-08/cast_dall_e.yaml" %}
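+
+Once imported, an automation based on the blueprint might look roughly like
+the sketch below. The input names come from the blueprint itself, but the
+blueprint path, the media player entity, and the config entry ID are
+placeholders that will differ on your instance:
+
+```yaml
+- alias: "Show a generated picture on the TV"
+  use_blueprint:
+    # Placeholder path; use the location where the blueprint was imported.
+    path: homeassistant/cast_dall_e.yaml
+    input:
+      assist_command: "Show me a picture of {prompt}"
+      open_ai_generation_size: "512"
+      # Placeholder values; pick your own config entry and Cast device.
+      open_ai_config_entry: "your_openai_config_entry_id"
+      media_player: media_player.living_room_tv
+```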

-{% details "TODO" %}

-

-- Improve/extend story

-- Proof read/spelling/grammar

-- Replace screenshot

-

-Sources:

-

-- https://github.com/home-assistant/core/pull/97018

-

-{% enddetails %}

-

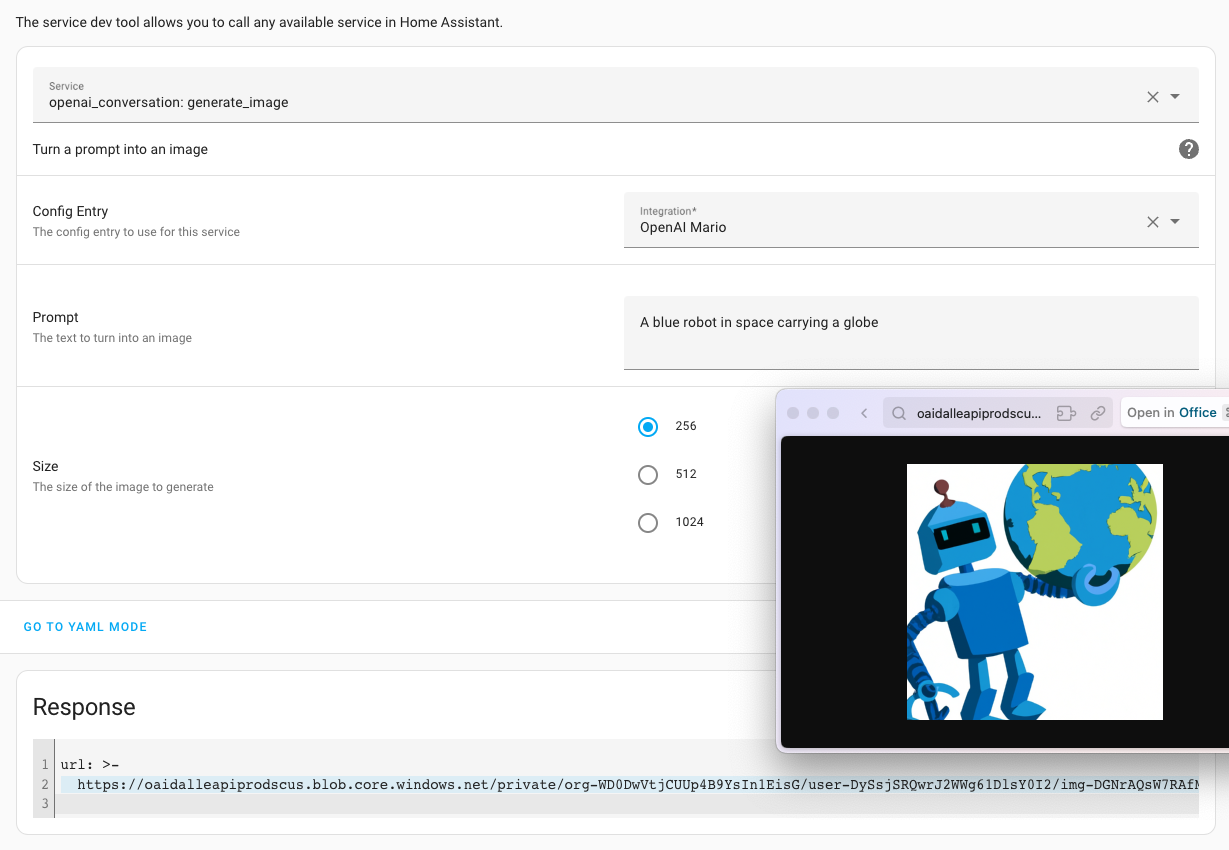

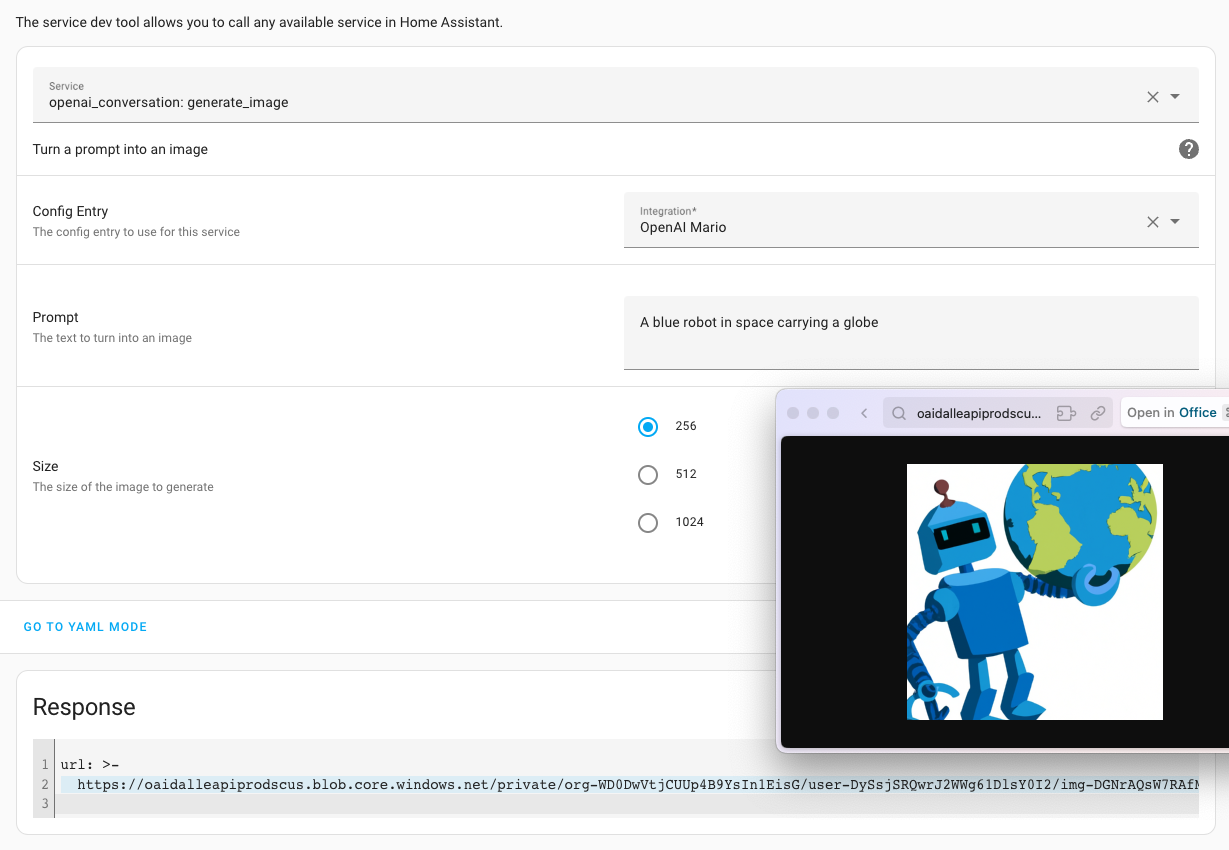

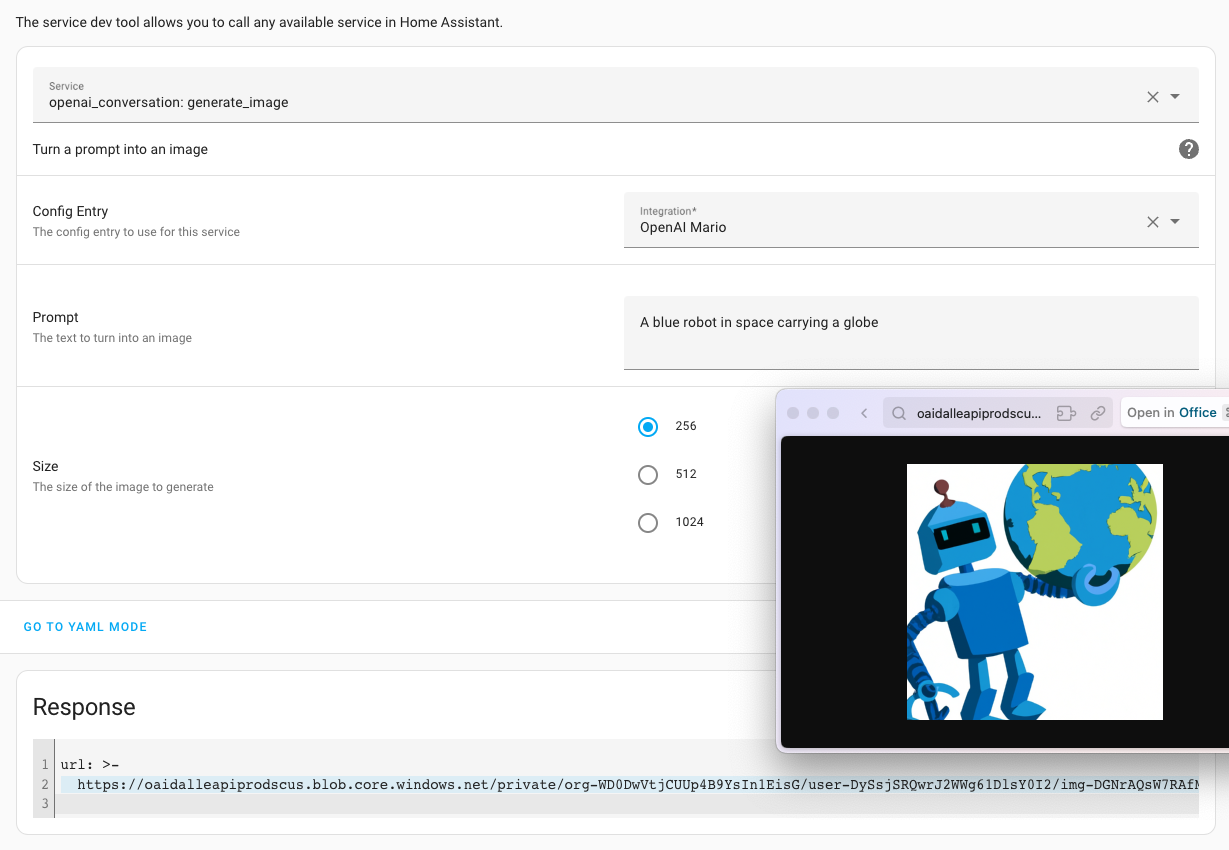

-In the last release, we added the ability for service to respond with data,

-and now we added a service that allows you to generate an image using

-[OpenAI's DALL-E](https://openai.com/dall-e-2).

-

-All you need is having the [OpenAI conversation agent](/integrations/openai_conversation)

-integration set up on your instance, and you will get a new service:

-{% my developer_call_service service="openai_conversation.generate_image" %}.

-

-

-Call this service describing the image you'd like the AI to generate, and

-it will respond with an image URL you can use in your automations.

-

-

-Temporary screenshot.

-

-

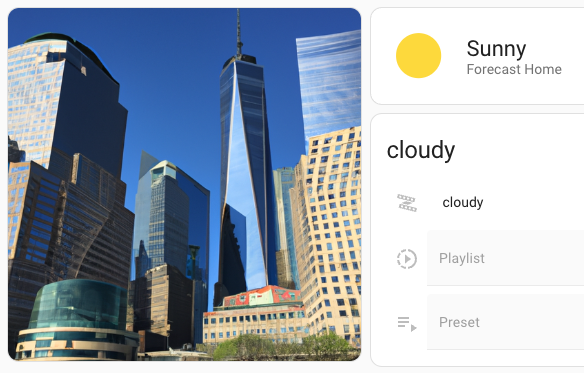

-You could, for example use this to generate an image of a city that matches

-the weather conditions outside of your home, let the AI generate an image

-about the latest new headline to show on your dashboard, or maybe a nice

-random piece of abstract art to show on your TV.

-

-

-Temporary screenshot. AI generated image of New York based on the current weather state.

-

-

-But if you combine it with the wildcard support for sentence triggers, you

-could even ask Home Assistant to generate an image for you by using your voice!

-

-{% my developer_call_service badge service="openai_conversation.generate_image" %}

+[JLo]: https://github.com/jlpouffier

## Condition selector

diff --git a/source/blueprints/blog/2023-08/cast_dall_e.yaml b/source/blueprints/blog/2023-08/cast_dall_e.yaml

new file mode 100644

index 00000000000..9bbf71d78d1

--- /dev/null

+++ b/source/blueprints/blog/2023-08/cast_dall_e.yaml

@@ -0,0 +1,72 @@

+blueprint:
+  name: Cast Dall-E generated images
+  description: |
+    Generate an image using your voice and show it on a screen.
+    Requirements:
+    - OpenAI Conversation configured
+    - A Cast-compatible media player
+  domain: automation
+  author: JLo
+  homeassistant:
+    min_version: 2023.7.99
+  input:
+    assist_command:
+      name: Assist Command
+      description: |
+        The Assist command you will use to generate the picture.
+        You can change the overall sentence to match your style and language.
+        **WARNING** you **MUST** include `{prompt}` in order to pass that variable to OpenAI.
+      default: "Show me a picture of {prompt}"
+      selector:
+        text:
+    open_ai_generation_size:
+      name: Image Size (px)
+      description: "Note: Bigger images take more time to generate"
+      default: "512"
+      selector:
+        select:
+          options:
+            - "256"
+            - "512"
+            - "1024"
+    open_ai_config_entry:
+      name: OpenAI Configuration
+      description: The OpenAI configuration entry to generate the image
+      selector:
+        config_entry:
+          integration: "openai_conversation"
+    media_player:
+      name: Media player
+      description: Media player to show the picture
+      selector:
+        entity:
+          filter:
+            integration: "cast"
+            domain: "media_player"
+    additional_conditions:
+      name: Additional conditions
+      description: |
+        Extra conditions you may want to add to this automation
+        (Example: Home occupied, TV on, etc)
+      default: []
+      selector:
+        condition:
+
+trigger:
+  - platform: conversation
+    command: !input assist_command
+condition: !input additional_conditions
+action:
+  - service: openai_conversation.generate_image
+    data:
+      size: !input open_ai_generation_size
+      config_entry: !input open_ai_config_entry
+      prompt: "{{trigger.slots.prompt}}"
+    response_variable: generated_image
+  - service: media_player.play_media
+    data:
+      media_content_type: image/jpeg
+      media_content_id: "{{generated_image.url}}"
+    target:
+      entity_id: !input media_player
+mode: single

diff --git a/source/images/blog/2023-08/assist-wildcard-dall-e-blueprint.png b/source/images/blog/2023-08/assist-wildcard-dall-e-blueprint.png

new file mode 100644

index 00000000000..0c8c494c810

Binary files /dev/null and b/source/images/blog/2023-08/assist-wildcard-dall-e-blueprint.png differ

diff --git a/source/images/blog/2023-08/sentence-trigger-wildcard-music.png b/source/images/blog/2023-08/sentence-trigger-wildcard-music.png

new file mode 100644

index 00000000000..d099ac78b77

Binary files /dev/null and b/source/images/blog/2023-08/sentence-trigger-wildcard-music.png differ
